RHYS HUNTER PORTFOLIO

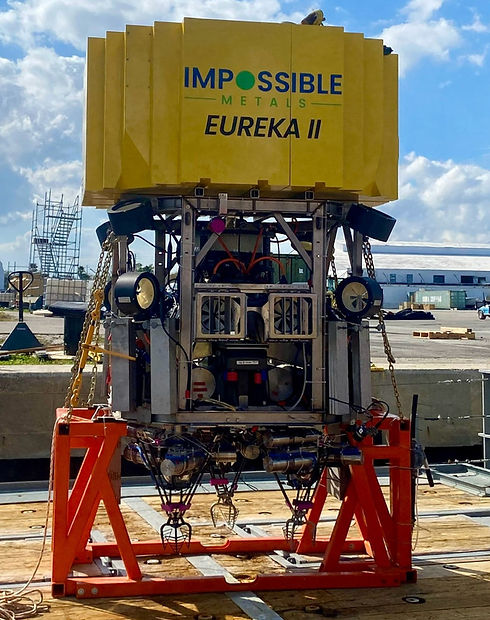

Eureka II Autonomous Underwater Vehicle

Overview:

This vehicle is designed to be deployed in water up to 6 km deep, where it autonomously navigates to the seafloor and collects nodules (small round mineral deposits) without disturbing the seafloor.

Technologies Used:

A large array of sensors: GNSS, fibre IMU, DVL, sonar, turbidity sensing, USBL, stereo cameras. ROS 2 with a custom navigation stack, Eclipse Cyclone DDS. Foxglove visualisation, Docker deployments, GitLab version control and CI. Jetson Orin and Intel RealSense computer vision system.

Challenges:

- Complex system for a single vehicle: ~15 computers, ~30 actuators, ~20 sensors, ~1 GB/s of data to store.
- Multiple communication mediums: acoustic, fibre, radio, satellite.
- Underwater application challenges: electrical noise interference, ground faults, depth proofing, remote nature of deployments.
- Localisation accuracy of better than 1 cm needed to map and pick nodules, which requires sensor fusion and advanced tuning.
- Limited hardware choices in an extreme environment.

My Contributions:

Heavily involved in all areas of the vehicle including:

- Headed testing and deployments, and often worked on electrical and hardware issues as they arose.
- Wrote control software and device drivers.
- Created a CI/CD and deployment system with automated data recovery and remote access.
- Adapted a simulation environment to suit the specific vehicle application.
- Chief problem solver: dug deep into complex issues such as intermittent communication failures and localisation discrepancies.

Traxx Autonomous Vineyard Weeder/Sprayer

Overview:

This autonomous robot is used in vineyards in France to combat weeds. Using RTK GNSS for localization, the machine travels autonomously up and down rows, either pulling plows or spraying.

Technologies Used:

RTK GNSS & IMU localization, LiDAR safety system, hydraulic and electric actuators, Nvidia Jetson-based control platform, Linux + ROS 2 operating system, cloud-connected and app-controlled.

Challenges:

- Tight navigation tolerances
- Machines located in France, making development remote
- Quick development cycle

My Contributions:

- Behavior tree contributions
- Speed control contributions
- Obstacle detection contributions
- Simulator improvements

Agerris Digital Farmhand

Overview:

This autonomous robot serves as a low-cost platform for conducting various tasks in the vegetable farming industry. It navigates by RTK GNSS and uses a stereo camera for obstacle detection. The machine's main selling point is a machine learning platform that can characterize crops and weeds and drive targeted weeding and spraying implements.

Technologies Used:

RTK GNSS & IMU localization, stereo camera safety system, electric actuators, Intel UP2 controller, Nvidia Jetson-based machine learning system, two downward-facing machine learning cameras, LoRa radio link, multi-machine operation, app-controlled.

Challenges:

- Low-cost platform
- Use of non-industrial-grade components
- Challenging environment: busy, dirty and diverse
- Machine learning in a changing environment

My Contributions:

- Responsible for on-farm update testing, bug documentation and bug fixes
- Liaising with customers and shareholders
- Built a system to identify crop line locations relative to the machine, enabling accurate tool platform positioning

Unreleased Self-Propelled Agricultural Machine

Overview:

This machine performs a shuttle service in an agricultural environment. An operator will call the machine and it will plan a path to their location, after which it will plan a path to an unload location and use perception-based navigation to position accurately for an unload sequence.

Technologies Used:

GNSS, LiDAR, mesh radio connection, Nvidia Jetson-based controller, Linux + ROS 2 control system.

Challenges:

- Vehicle weight over 30 t and speeds above 20 km/h
- Multi-machine operation
- Task-dependent, perception-based navigation
- Safely operating in a busy environment

My Contributions:

- Obstacle detection contributions
- Speed control contributions
- Radio message compression contributions
- Testing many new features
- Simulator improvements

Unreleased ATV-Based Project

Overview:

This project involves a route-following delivery robot for use in remote environments. The loading and unloading sequences use perception-based navigation.

Technologies Used:

RTK GNSS, stereo camera, LiDAR, radar, Nvidia Jetson-based controller, Linux + ROS 2 operating system.

Challenges:

- Perception-based loading and unloading
- Loading sequence in a GNSS-denied environment
- Completely unsupervised operation

My Contributions:

- Interfacing the steering, brake, accelerator, gear shift and OEM ECU with the rest of the system
- Electrical system design and prototyping
- Machine testing